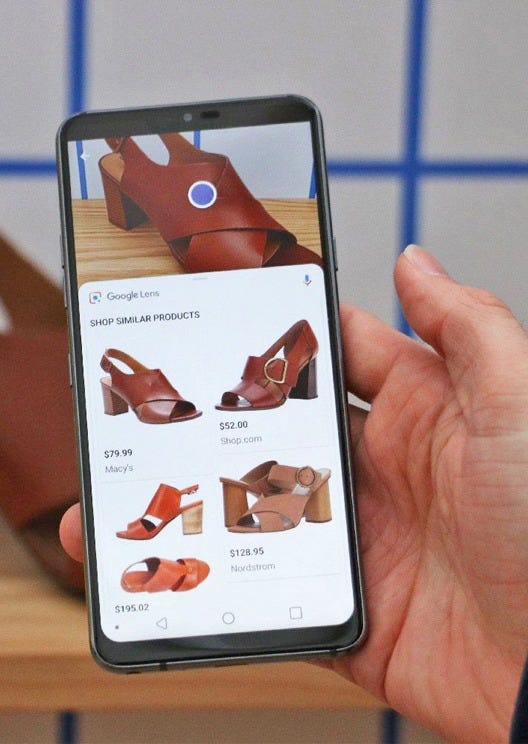

I’m a huge fan of thrift and vintage shopping. As a result, I was a pretty early adopter of Google Lens - Google’s visual search tool. When I find something cool on the racks at Goodwill, I take a picture of the item and search in Google Lens. This tells me more details about the value and history of what I’m holding. Once, the coat I found turned out to be from a luxury brand I was unfamiliar with, but was reselling for hundreds of dollars on eBay. On another occasion, I found a designer-labeled piece, but upon sleuthing in Google Lens, I discovered it was from a diffusion line with a department store - basically worthless. While I don’t resell my thrift finds, Google Lens adds context around the value of an item that I find helpful when shopping.

My once-peaceful thrift trips are no more. My local shops are packed with people, phones in hand, searching for the best resale opportunities to upload to Depop, eBay and other platforms. On the flip side (pun intended), thrift and consignment stores are using visual search to better price their wares, so unicorn finds like my designer coat are becoming more rare. While thrifters have always researched the provenance of their finds, visual search is a one-click, dummy-proof way to understand the market value of pretty much any consumable item. You don’t need to know about fabric composition. You don’t need to know about designer labels. You just need a phone with a camera.

Beyond the thrift store, this technology has implications for how we shop.

Visual search is getting better

I’ll leave the detailed explanation to the experts, but here’s a quick summary of how visual search works:

AI visual search technology relies on advanced image recognition and machine learning techniques to understand the content of the images and match them with relevant items, products, or information from a database.
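To make that matching step a little more concrete, here is a minimal sketch of the general idea. It assumes each image has already been turned into a list of numbers (an "embedding") by some image-recognition model. The tiny three-number vectors, the catalog items, and the `visual_search` function below are all made up for illustration. This is not how Google Lens or any specific product is implemented.

```python
import math

def cosine_similarity(a, b):
    """Return how closely two vectors point in the same direction (1.0 = identical direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical catalog: item name -> toy embedding.
# A real system would use vectors with hundreds of dimensions produced by a trained model.
CATALOG = {
    "wool trench coat": [0.9, 0.1, 0.2],
    "denim jacket": [0.2, 0.9, 0.1],
    "leather tote bag": [0.1, 0.2, 0.9],
}

def visual_search(query_embedding, catalog, top_k=2):
    """Rank catalog items by similarity to the query photo's embedding."""
    scored = [
        (cosine_similarity(query_embedding, vector), name)
        for name, vector in catalog.items()
    ]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:top_k]]

# Pretend this vector came from a photo of a coat on the rack at Goodwill.
photo_embedding = [0.85, 0.15, 0.25]
results = visual_search(photo_embedding, CATALOG)
print(results)
```

The photo's vector sits closest to the trench coat's vector, so the coat comes back as the top match. Everything hard (turning pixels into good vectors, searching billions of items quickly) is hidden behind the assumptions above.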

Machine learning ensures visual search becomes faster, more accurate, and increasingly personalized over time, aligning better with user needs and providing more intuitive experiences. Without getting too deep into the technical details, a few factors drive the continuous improvement of these tools:

- More data - many of these tools (like those from Google and Amazon) handle billions of searches a month. This expands their dataset and improves accuracy.
- Signals from users - the quality of results is measured in part via user feedback. When you engage with the search results, e.g. if you end up purchasing something, you’re signaling that those results were relevant. The algorithm can then adapt based on that learning.
- Technical advances in the underlying models powering these tools
- Advances in hardware and processing ability

Taken all together, consumers have observed a very fast improvement in the power and accuracy of visual search tools in the past few years.

Visual search usage: Limited but growing fast

Unsurprisingly, with improvements in visual search capability comes increased adoption. While overall adoption is still relatively low, it’s growing fast. Like many new technologies, adoption is stronger among younger users. A few key stats from eMarketer:

While only 10% of US adults regularly use visual search, 42% are at least somewhat interested in using the tool, as of August 2024.

Younger consumers are most likely to use visual search—22% of consumers ages 16 to 34 have seen or bought an item via visual search compared with 17% of 35- to 54-year-olds and 5% of consumers ages 55 and older.

Visual searches worldwide increased 70% YoY, per Amazon.

Google gets 20 billion Lens searches a month, 4 billion of which are related to shopping. This compares to ~1.5 billion monthly searches that start on Google Shopping.

Pretty impressive!
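For a sense of proportion, here is the back-of-the-envelope arithmetic on the Google figures quoted above. The inputs are just the rounded numbers from this post (the Google Shopping figure is approximate), so treat the outputs as rough.

```python
# Rounded figures quoted above; the Google Shopping number is approximate.
lens_monthly_searches = 20e9
lens_shopping_searches = 4e9
google_shopping_searches = 1.5e9

# Share of Lens searches that are shopping related.
shopping_share = lens_shopping_searches / lens_monthly_searches

# Lens shopping searches relative to searches that start on Google Shopping.
lens_vs_shopping = lens_shopping_searches / google_shopping_searches

print(f"Shopping share of Lens searches: {shopping_share:.0%}")
print(f"Lens shopping vs. Google Shopping: {lens_vs_shopping:.1f}x")
```

In other words, about one in five Lens searches is shopping related, and that slice alone is more than two and a half times the volume that starts on Google Shopping.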

More players are releasing visual search products

In the summer and fall of 2024, I saw a lot of announcements about new visual search products hitting the market. The retailer in me immediately thought that platforms and brands wanted to get these out ahead of holiday - when many consumers are breaking from their typical consumption patterns to search for gifts in new ways. These products span the markets of tech, marketing, and ecommerce. They range from Apple’s offering, which will embed visual search technology in the native phone camera, to startups like Phia, which leverage visual search for the specific use case of shopping and price comparison.

Because I’m focused on ecommerce, I’ll take that lens when considering the impacts of visual search. But this technology goes far beyond shopping.

Consumer-facing visual search: a market map

I labeled this chart “consumer-facing” because there is a whole other world of tools that businesses use that leverage visual search technologies. For example, resale platforms use visual search to authenticate goods. There are also a lot of emerging players building visual search tools that plug into brand websites. But that will need to be the subject of a future post!

All the big tech players are experimenting with visual search - no surprise

In retail, only the big players have built this feature so far. That makes sense: searching by image is most useful when a retailer or marketplace has a vast or frequently changing catalog, and licensing or building the core technology for high-quality visual search is presumably costly. So many retailers are probably taking a “wait and see” approach.

I’m particularly excited about visual search’s role in resale. The transformational nature of the tech for this industry in particular is why we’re already seeing several players investing in this.

I’m also so excited to follow emerging players, like Phia, and Perplexity’s shopping tool.

Visual Search: Changing how we shop

I initially wrote back in October about how visual search could impact brands. I’m fleshing out those ideas more now.

Reshaping product search - the move away from filters

Ecommerce traditionally has had a very linear search architecture. For example:

Women’s > Dresses > Mini > Black

It’s easy to see the limitations of this approach. Perhaps there’s a perfect navy blue mini dress that I would love, but I’ll never see it with this filter structure. That gap represents money left on the table for brands. Selecting from a long list of filters is also annoying as a shopper, especially on mobile, where the UX is often subpar. Often, shoppers opt for the “endless scroll”, browsing all dresses, to be sure they don’t miss a good option. While brands can design their navigations and collection pages to prioritize best sellers, the level of personalization is pretty limited. Shopping online can feel like a chore.
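Here is a toy sketch of that navy dress problem. It compares a strict filter with a looser "how close is this?" score. The product list, the `COLOR_NEIGHBORS` table, and the scoring weights are all invented for illustration. A real visual search tool would compare image embeddings rather than hand-written attributes.

```python
# Hypothetical mini catalog with hand-labeled attributes.
PRODUCTS = [
    {"name": "Black mini dress", "category": "dress", "length": "mini", "color": "black"},
    {"name": "Navy mini dress", "category": "dress", "length": "mini", "color": "navy"},
    {"name": "Black maxi dress", "category": "dress", "length": "maxi", "color": "black"},
    {"name": "Red sneakers", "category": "shoes", "length": None, "color": "red"},
]

# Assumption for this sketch: which colors count as "close enough" to each other.
COLOR_NEIGHBORS = {"black": {"navy", "charcoal"}}

def filter_search(products, **required):
    """Classic filter navigation: every selected attribute must match exactly."""
    return [
        p["name"] for p in products
        if all(p.get(key) == value for key, value in required.items())
    ]

def similarity_search(products, wanted, min_score=2.5):
    """Score each product by closeness to what the shopper wants, then rank."""
    scored = []
    for p in products:
        score = 0.0
        for key, value in wanted.items():
            if p.get(key) == value:
                score += 1.0  # exact match on this attribute
            elif key == "color" and p.get(key) in COLOR_NEIGHBORS.get(value, set()):
                score += 0.5  # partial credit for a nearby color
        if score >= min_score:
            scored.append((score, p["name"]))
    scored.sort(key=lambda item: -item[0])  # best score first
    return [name for _, name in scored]

wanted = {"category": "dress", "length": "mini", "color": "black"}
filtered = filter_search(PRODUCTS, **wanted)
similar = similarity_search(PRODUCTS, wanted)
print(filtered)
print(similar)
```

The strict filter returns only the black mini dress. The similarity version also surfaces the navy one, which is exactly the sale the filter structure would have missed.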

I discussed in Part I how Google’s AI search tools are enabling a shift to more conversational search - for example, you might type “I want a dark colored dress for a semi-formal event” and see curated options. Visual search is another tool for brands to strengthen their customer funnel, making it easier for customers to find relevant things and keeping them engaged on-site.

Reduced pricing power for sellers

While in-season items often have an MSRP that is consistent across retailers, any time an item is marked down at one retailer, the others are at a major disadvantage unless they match. Consumers were always savvy about comparison shopping across major retailers (especially with the increased popularity of Google Shopping), but now they can look across eBay and other resale sites too. This will further erode the position of department stores and advantage any digitally savvy player who can effectively monitor their online listings.

An opportunity for smaller brands

Many marketing technology trends aggregate gains to the biggest companies that can spend the most money on paid formats and run strategies at scale. But I believe visual search is positioned to advantage smaller, independent brands through enhancing discoverability.

More brands in consideration set: If I see that an unfamiliar brand makes something nearly identical to what I’m searching for, I’m likely open to try it - especially if there are reviews and other forms of social proof that I can reference. Before Google Lens, I might check around on my favorite brand sites to see what was out there, but the consideration set would be more limited.

Reduced brand loyalty: Driven by the above factors, consumers’ loyalty to a given brand is challenged by increased visibility of relevant options.

More robust resale/used good market

I think a lot about the resale market because the majority of my closet is secondhand and vintage. I believe that resale, already a fast-growing area of ecommerce, will be a beneficiary of this technology.

Increased share of wallet from resale/used items: With visual search, it’s easier to find an item without knowing the brand and style specifications. This will bring more traffic to resellers. It will also force them to make their pricing across platforms more competitive.

Imperative for brick-and-mortar thrift and consignment to move online: Visual search will unlock more traffic to secondhand listings, which creates an opportunity for brick-and-mortar resale businesses to move into ecommerce, or at least list items online to drive foot traffic to their physical stores.

Increased interest and reduced barriers to selling used items: With an increased ability to see the value of your items on the resale market, there will be an increased interest in selling. For the past few years, growth of resale has been constrained on the supply side - it’s annoying to create listings as a single seller and manage them through to fulfillment, all for a relatively modest payday. New tools are simplifying listings across multiple platforms, and these tools themselves leverage AI and image recognition to reduce the manual data input required to list items.

I am gobsmacked that The RealReal hasn’t yet released any visual search features. This is a huge miss when compared to eBay’s and Poshmark’s visual search offerings. I’m a big TRR fan and I don’t want to see them miss the boat on this!

Personalized marketing opportunities

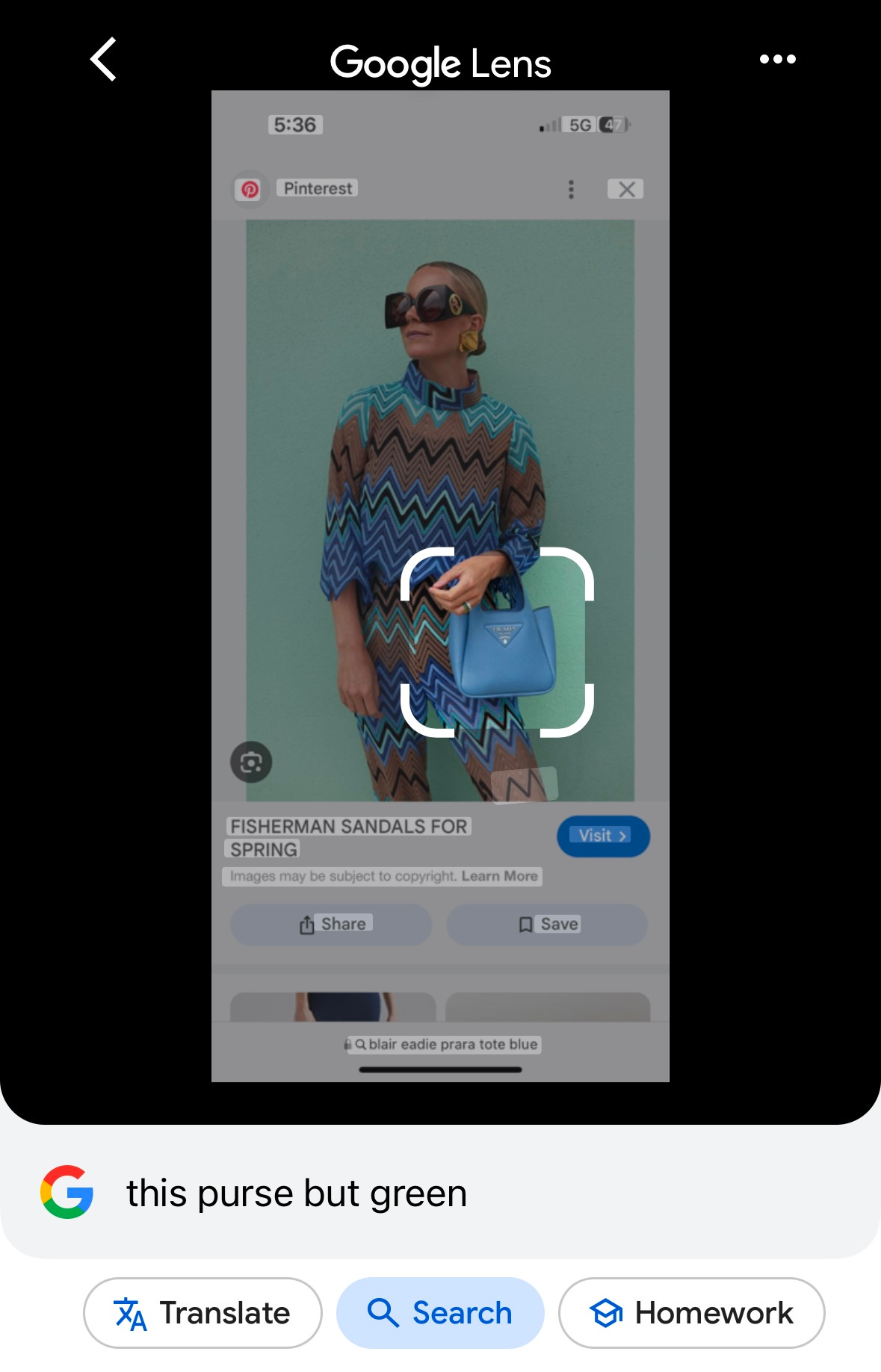

Visual searches contain a lot more context than a search term. In the past, I may have searched for “blue Prada tote bag.” Now, I can search this image:

This image contains a lot of valuable context - it shows that I like the style blogger Blair Eadie. It indicates that I might like 70s style and bold prints. It gives clues as to other brands and styles I might gravitate to. Capturing these insights could allow tech companies and brands to better tailor recommendations to me beyond a single query.

The future is here - Multi-Modal search

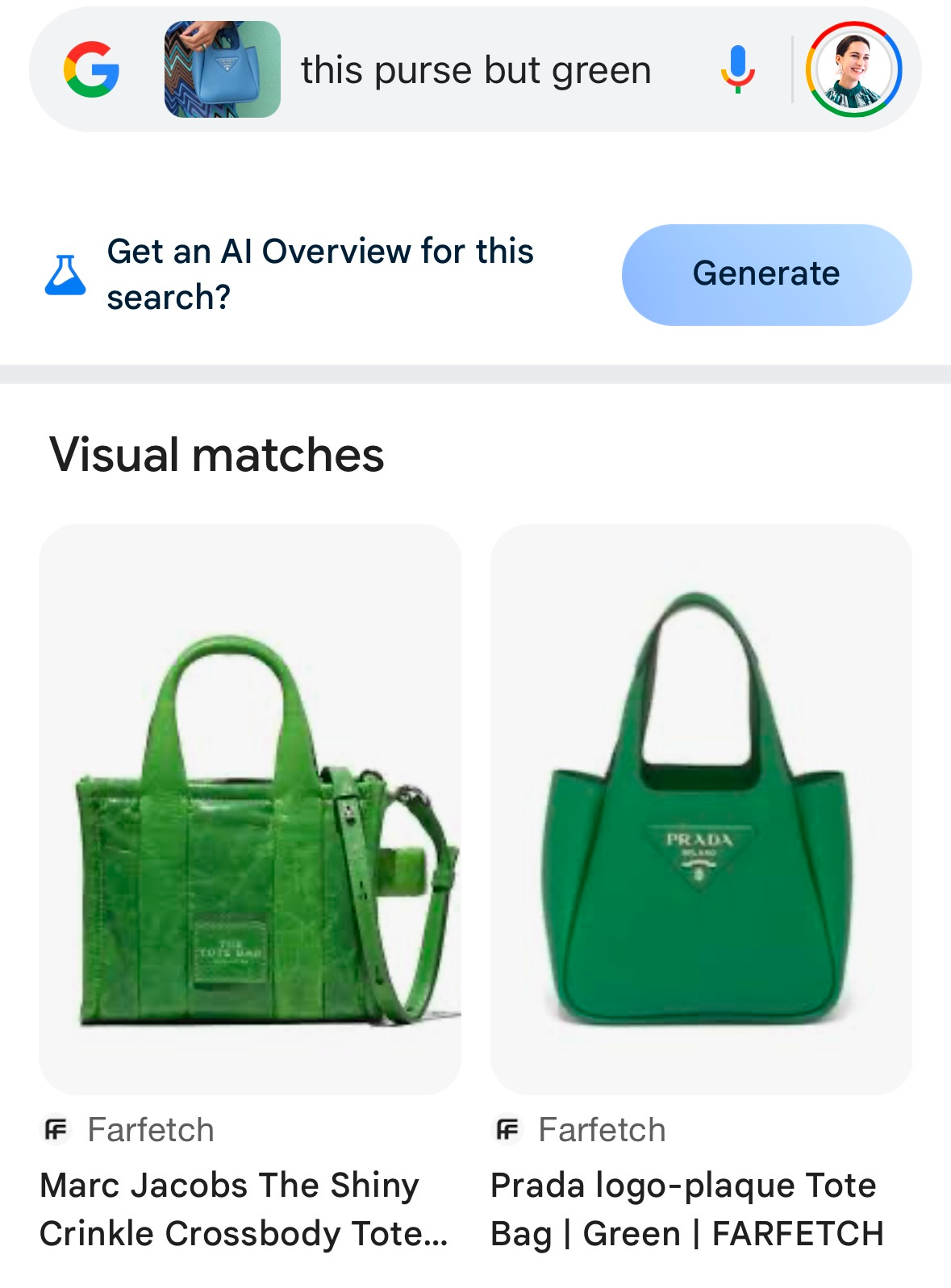

A Google Lens update from October 2024 sheds light on how we’ll all be shopping in a few months:

Lens search is now multimodal, a hot word in AI these days, which means people can now search with a combination of video, images, and voice inputs. Instead of pointing their smartphone camera at an object, tapping the focus point on the screen, and waiting for the Lens app to drum up results, users can point the lens and use voice commands at the same time, for example, “What kind of clouds are those?” or “What brand of sneakers are those and where can I buy them?”

Of course, I had to try it.

Using the same image above, I prompted Lens to focus on the purse and added the text “this purse but green.” The search did turn up the exact item in green. Unfortunately, it was sold out, so I will not be blowing $3k on a cute bag today.

*Side note - to improve this product, Lens could prioritize retailers where the item isn’t sold out.
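That side note could be sketched as a simple re-ranking step: show in-stock listings first, then order by relevance within each group. The retailer names, relevance scores, and the `prioritize_in_stock` function are hypothetical. I have no visibility into how Lens actually ranks results.

```python
def prioritize_in_stock(results):
    """Put in-stock listings first; within each group, most relevant first."""
    # False sorts before True, so `not in_stock` places available items at the top.
    return sorted(results, key=lambda r: (not r["in_stock"], -r["relevance"]))

# Hypothetical search results for "this purse but green".
search_results = [
    {"retailer": "Retailer A", "relevance": 0.98, "in_stock": False},
    {"retailer": "Retailer B", "relevance": 0.91, "in_stock": True},
    {"retailer": "Retailer C", "relevance": 0.95, "in_stock": True},
]

ranked = prioritize_in_stock(search_results)
ranked_retailers = [r["retailer"] for r in ranked]
print(ranked_retailers)
```

Here the sold-out listing from Retailer A drops to the bottom even though it was the closest match, and the two retailers that can actually ship the bag move up.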

Conclusions

In Part I of this series, I wasn’t 100% sold on the utility of AI Overviews, at least for commerce. As a shopper, I’m sold on visual search - it’s very intuitive and returns relevant results a lot of the time. Multi-modal search opens up the potential for even more relevant results. I’m also excited about how this technology may increase discoverability for independent brands and secondhand products and sellers. Will paid placements in these visual search tools start watering down the experience? More than likely, yes. But for now, I’m a fan.

Sources I found useful while researching for this piece

- ’s “For Dummies” explainer on LLMs (thank you for the super clear explanation!)

- Retail Brew: Google Lens gets a precise tweak before the holidays to push shopping - October 2024
- TechCrunch: AI-powered visual search comes to the iPhone - September 2024
- AdWeek: Poshmark Takes on Google and TikTok With Gen AI Visual Search Update - October 2024
- Wired: Google’s Visual Search can now answer even more complex questions - October 2024

ICYMI

Product discovery and AI: Part I

Thanks for reading, and stay curious!

Melina
